I like using Terminal; it is usually more efficient and a lot quicker than the normal methods you use on your Mac. This post is about one widely used tool on UNIX and Linux systems: wget. Wget is a small program that can download files and folders to your computer through Terminal.

The wget command is an internet file downloader that can download anything from files and web pages all the way through to entire websites.

Basic Usage

The wget command takes the form wget [options] url, where the options are optional and the URL points at the file or page you want.

For example, in its most basic form, you would write a command something like this:
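As a minimal sketch, the basic form just hands a URL to wget. The address in the example call is a placeholder built from the names used in this article, and fetch is a hypothetical helper added here only so that the URL is always quoted.

```shell
# Hypothetical helper: download one file into the current directory.
# Quoting "$1" stops the shell from interpreting characters such as & or ? in the URL.
fetch() {
    wget "$1"
}

# Example call (placeholder URL):
#   fetch "http://www.domain.com/filename.zip"
```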

This will download the filename.zip file from www.domain.com and place it in your current directory.

Redirecting Output

The -O option sets the output file name. If the file was called filename-4.0.1.zip and you wanted to save it directly to filename.zip you would use a command like this:
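A rough sketch of that rename, assuming the file lives at a placeholder address on www.domain.com; download_as is a hypothetical helper name, not part of wget.

```shell
# Hypothetical helper: save a download under a local name of your choosing.
# $1 = URL to download, $2 = name to save it as
download_as() {
    wget -O "$2" "$1"
}

# Example call (placeholder URL):
#   download_as "http://www.domain.com/filename-4.0.1.zip" filename.zip
```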

The wget program supports the HTTP, HTTPS, and FTP protocols, so the URLs you give it will usually start with http://, https://, or ftp://.

Downloading in the background

If you want to download a large file and close your connection to the server you can use the command:
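One way to do this, assuming the -b option described later in this article is what you are after, is to let wget detach itself from your terminal. The helper name and the URL are placeholders.

```shell
# Hypothetical helper: start a download that detaches from the terminal.
# With -b and no -o option, wget writes its progress to ./wget-log.
fetch_in_background() {
    wget -b "$1"
}

# Example call (placeholder URL):
#   fetch_in_background "http://www.domain.com/filename.zip"
```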

Downloading Multiple Files

If you want to download multiple files you can create a text file with the list of target files. Each filename should be on its own line. You would then run the command:
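Here is a small sketch of that workflow. The file name filelist.txt and the three addresses are made up for illustration, and fetch_list is a hypothetical helper.

```shell
# Create the list of targets, one URL per line (placeholder addresses).
cat > filelist.txt <<'EOF'
http://www.domain.com/file1.zip
http://www.domain.com/file2.zip
http://www.domain.com/file3.zip
EOF

# Hypothetical helper: download every URL named in the list file.
fetch_list() {
    wget -i "$1"
}

# Example call:
#   fetch_list filelist.txt
```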

You can also do this with an HTML file. If you have an HTML file on your server and you want to download all the links within that page, you need to add --force-html to your command.

To use this, all the links in the file must be full links. If they are relative links, you will need to add a <base href='/support/knowledge_base/'> tag to the HTML file before running the command.
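A sketch of the HTML variant follows. The file name links.html and the helper name are assumptions. The optional second argument uses wget's --base option, which the article does not mention; it resolves relative links against a URL so you do not have to edit the HTML file.

```shell
# Hypothetical helper: download everything linked from a local HTML file.
# $1 = HTML file to read links from
# $2 = optional base URL used to resolve relative links
fetch_html_links() {
    if [ -n "$2" ]; then
        wget --force-html --base="$2" -i "$1"
    else
        wget --force-html -i "$1"
    fi
}

# Example calls (placeholder names):
#   fetch_html_links links.html
#   fetch_html_links links.html "http://www.domain.com/support/knowledge_base/"
```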

Limiting the download speed

Usually, you want your downloads to be as fast as possible. However, if you want to continue working while downloading, you want the speed to be throttled.

To do this use the --limit-rate option. You would use it like this:
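As an illustration, the sketch below caps a download at a rate you pass in. The 200k figure in the example call is an arbitrary choice, not a value from the original article; wget accepts a k or m suffix for kilobytes or megabytes per second.

```shell
# Hypothetical helper: download with a speed cap.
# $1 = rate such as 200k or 1m, $2 = URL
fetch_throttled() {
    wget --limit-rate="$1" "$2"
}

# Example call (placeholder URL, arbitrary rate):
#   fetch_throttled 200k "http://www.domain.com/filename.zip"
```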

Continuing a failed download

If you are downloading a large file and it fails part way through, you can continue the download in most cases by using the -c option.

For example:
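A minimal sketch, assuming you run it from the directory that still holds the partial file; the helper name and URL are placeholders.

```shell
# Hypothetical helper: pick up a partial download where it stopped.
# Run it in the directory that contains the incomplete file.
fetch_resume() {
    wget -c "$1"
}

# Example call (placeholder URL):
#   fetch_resume "http://www.domain.com/filename.zip"
```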

Without -c, restarting a download of the same filename starts again from the beginning, and wget saves the new copy under the same name with a numeric suffix, starting at .1.

Downloading in the background

If you want to download in the background use the -b option. An example of this is:
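The sketch below pairs -b with -o so the progress output goes to a log file you name yourself instead of the default wget-log. The log file name and the helper name are assumptions.

```shell
# Hypothetical helper: background download with a named log file.
# $1 = URL, $2 = log file to write progress to
fetch_in_background_logged() {
    wget -b -o "$2" "$1"
}

# Example call (placeholder URL and log name):
#   fetch_in_background_logged "http://www.domain.com/filename.zip" download.log
# You can then watch progress with:
#   tail -f download.log
```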

Checking if remote files exist before a scheduled download

If you want to schedule a large download ahead of time, it is worth checking that the remote files exist. The option to run a check on files is --spider.

In circumstances such as this, you will usually have a file with the list of files to download inside. An example of how this command will look when checking for a list of files is:
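For a list of files, a sketch could look like this, assuming the list is a text file with one URL per line; the helper name is hypothetical.

```shell
# Hypothetical helper: check every URL in a list file without downloading anything.
# $1 = text file containing one URL per line
check_list() {
    wget --spider -i "$1"
}

# Example call (placeholder file name):
#   check_list filelist.txt
```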

However, if it is just a single file you want to check, then you can use this formula:
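For a single file, one possible approach is to rely on wget's exit status, which is nonzero when the server reports an error. The -q flag, which silences the output, is an addition here, and remote_exists is a hypothetical name.

```shell
# Hypothetical helper: succeeds only if the remote file can be found.
# -q suppresses the output so only the exit status matters.
remote_exists() {
    wget -q --spider "$1"
}

# Example call (placeholder URL):
#   if remote_exists "http://www.domain.com/filename.zip"; then
#       echo "file is there"
#   else
#       echo "file is missing"
#   fi
```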

Copy an entire website

If you want to copy an entire website you will need to use the --mirror option. As this can be a complicated task there are other options you may need to use such as -p, -P, --convert-links, --reject and --user-agent.

| Option | Description |
| --- | --- |
| -p | This option is necessary if you want all additional files needed to view the page, such as CSS files and images. |
| -P | This option sets the download directory. Example: -P downloaded |
| --convert-links | This option will fix any links in the downloaded files. For example, it will change any links that refer to other downloaded files into local ones. |
| --reject | This option prevents certain file types from downloading. If, for instance, you wanted all files except flash video files (flv), you would use --reject=flv |
| --user-agent | This option is for when a site has protection in place to prevent scraping. You would use this to set your user agent to make it look like you were a normal web browser and not wget. |

Using all these options to download a website would look like this:
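Put together, a sketch might look like the following. The target directory, the flv filter, and the shortened Mozilla/5.0 user agent string are illustrative values, and mirror_site is a hypothetical helper.

```shell
# Hypothetical helper: mirror a site into a local directory.
# $1 = site URL, $2 = directory to download into
mirror_site() {
    wget --mirror -p --convert-links \
         -P "$2" \
         --reject=flv \
         --user-agent="Mozilla/5.0" \
         "$1"
}

# Example call (placeholder URL and directory):
#   mirror_site "http://www.domain.com/" ./local-dir
```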

TIP: Being Nice

It is always best to ask permission before downloading a site belonging to someone else and even if you have permission it is always good to play nice with their server. These two additional options will ensure you don’t harm their server while downloading.
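The two options are --wait and --limit-rate. A sketch of a polite mirror, with a hypothetical helper name and placeholder arguments, could be:

```shell
# Hypothetical helper: mirror a site while going easy on the server.
# $1 = site URL, $2 = directory to download into
mirror_site_politely() {
    wget --mirror -p --convert-links \
         --wait=15 --limit-rate=50K \
         -P "$2" "$1"
}

# Example call (placeholder URL and directory):
#   mirror_site_politely "http://www.domain.com/" ./local-dir
```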

This will wait 15 seconds between each page and limit the download speed to 50K/sec.

Downloading using FTP

If you want to download a file via FTP and a username and password are required, then you will need to use the --ftp-user and --ftp-password options.

An example of this might look like:
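A sketch with made-up credentials and a placeholder server address; fetch_ftp is a hypothetical helper.

```shell
# Hypothetical helper: download a file from an FTP server that needs a login.
# $1 = username, $2 = password, $3 = ftp:// URL
fetch_ftp() {
    wget --ftp-user="$1" --ftp-password="$2" "$3"
}

# Example call (placeholder credentials and URL):
#   fetch_ftp myuser mypassword "ftp://ftp.domain.com/filename.zip"
```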

Retry

If you are getting failures during a download, you can use the -t option to set the number of retries. Such a command may look like this:
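For instance, the sketch below takes the retry count as an argument. The count of 10 in the example call is an arbitrary choice, and the helper name is hypothetical.

```shell
# Hypothetical helper: download with a chosen number of retries.
# $1 = number of tries (or inf), $2 = URL
fetch_with_retries() {
    wget -t "$1" "$2"
}

# Example call (placeholder URL, arbitrary retry count):
#   fetch_with_retries 10 "http://www.domain.com/filename.zip"
```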

You could also set it to infinite retries using -t inf.

Recursive down to level X

If you want to get only the first level of a website, then you would use the -r option combined with the -l option.

For example, if you wanted only the first level of a website, you would use:
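A sketch of a depth-limited download, where the depth is passed in so the same helper covers level 1 or deeper; the helper name and URL are placeholders.

```shell
# Hypothetical helper: recursive download limited to a given depth.
# $1 = maximum depth (1 = first level only), $2 = site URL
fetch_levels() {
    wget -r -l "$1" "$2"
}

# Example call (placeholder URL):
#   fetch_levels 1 "http://www.domain.com/"
```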

Setting the username and password for authentication

If you need to authenticate an HTTP request you use the command:
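One way to sketch this is with wget's --http-user and --http-password options. The credentials and URL below are made up, and fetch_http_auth is a hypothetical helper.

```shell
# Hypothetical helper: download a file protected by HTTP authentication.
# $1 = username, $2 = password, $3 = URL
fetch_http_auth() {
    wget --http-user="$1" --http-password="$2" "$3"
}

# Example call (placeholder credentials and URL):
#   fetch_http_auth myuser mypassword "http://www.domain.com/filename.zip"
```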

wget is a very complete downloading utility, and it can get complicated. It has many more options, and they can be combined in many ways to achieve a specific task. For more details, you can use the man wget command in your terminal/command prompt to bring up the wget manual. The wget manual is also available in webpage format on the GNU website.

There are many reasons why you should consider downloading entire websites. Not all websites stay up forever. Sometimes, when websites are not profitable or when the developer loses interest in the project, (s)he takes the website down along with all the amazing content found there. There are still parts of the world where the Internet is not available at all times or where people do not have access to the Internet 24×7. Offline access to websites can be a boon to these people.

Either way, it is a good idea to save important websites with valuable data offline so that you can refer to them whenever you want. It is also a time saver. You won’t need an Internet connection and never have to worry about the website shutting down. There are many programs and web services that will let you download websites for offline browsing.

Let’s take a look at them below.

Download Entire Website

1. HTTrack

This is probably one of the oldest website downloaders available for the Windows platform. There is no web or mobile app version available, primarily because, in those days, Windows was the most commonly used platform. The UI is dated but the features are powerful and it still works like a charm. Licensed under the GPL, this free and open source website downloader has a light footprint.

You can download all webpages, including files and images, with all the links remapped and intact. Once you open an individual page, you can navigate the entire website in your browser, offline, by following the link structure. What I like about HTTrack is that it allows me to download only the parts that were updated recently, so I don’t have to download everything all over again. It comes with scan rules that let you include or exclude file types, webpages, and links.

Pros:

- Free

- Open source

- Scan rules

Cons:

- Bland UI

2. SurfOnline

SurfOnline is another Windows-only program that you can use to download websites for offline use; however, it is not free. Instead of opening webpages in a browser like Chrome, you can browse downloaded pages right inside SurfOnline. Like HTTrack, it has rules for choosing what to download, but they are very limited: you can only select by media type and not by file type.

You can download up to 100 files simultaneously; however, the total number cannot exceed 400,000 files per project. On the plus side, you can also download password-protected files and webpages. SurfOnline’s price begins at $39.95 and goes up to $120.

Pros:

- Scan rules

- CHM file support

- Write to CD

- Built-in browser

- Download password-protected pages

Cons:

- UI is dated

- Not free

- Limited scan rules

3. Website eXtractor

This is another program for downloading websites that comes with its own browser. Frankly, I would like to stick with Chrome or something like Firefox. Anyway, Website eXtractor looks and works much like the previous two website downloaders we discussed. You can omit or include files based on links, name, media type, and also file type. There is also an option to download files, or not, based on directory.

One feature I like is the ability to search for files based on file extension, which can save you a lot of time if you are looking for a particular file type like eBooks. The description says that it comes with a DB maker, which is useful for moving websites to a new server, but in my personal experience there are far better tools available for that task.

The free version is limited to downloading 10,000 files after which it will cost you $29.95.

Pros:

- Built-in browser

- Database maker

- Search by file type

- Scan rules

Cons:

- Not free

- Basic UI

4. Getleft

Getleft has a better and more modern UI than the website downloaders above. It comes with some handy keyboard shortcuts which regular users would appreciate. Getleft is free and open source, but its development has pretty much stalled.

There is no support for secure sites (https); however, you can set rules for downloading file types.

Pros:

- Open source

Cons:

- No development

5. SiteSucker

SiteSucker is the first macOS website downloader on this list. It ain’t pretty to look at but that is not why you are using a site downloader anyway. I am not sure whether it is the restrictive nature of Apple’s ecosystem or the developer wasn’t thinking ahead, but SiteSucker lacks key features like search and scan rules.

This means there is no way to tell the software what you want to download and what needs to be left alone. Just enter the site URL and hit Start to begin the download process. On the plus side, there is an option to translate downloaded materials into different languages. SiteSucker will cost you $4.99.

Pros:

- Language translator

Cons:

- No scan rules

- No search

6. Cyotek Webcopy

Cyotek Webcopy is another program for downloading websites to access offline. You can define whether you want to download all the webpages or just some of them. Unfortunately, there is no way to download files based on type, like images, videos, and so on.

Cyotek Webcopy uses scan rules to determine which part of the website you want to scan and download and which part to omit. For example, tags, archives, and so on. The tool is free to download and use and is supported by donations only. There are no ads.

7. Dumps (Wikipedia)

Wikipedia is a good source of information, and if you know your way around and follow the sources cited on the page, you can overcome some of its limitations. There is no need to use a website ripper or downloader to get Wikipedia pages onto your hard drive. Wikipedia itself offers Dumps.

These dumps are available in different formats including HTML, XML, and DVDs. Depending on your need, you can go ahead and download these files, or dumps, and access them offline. Note that Wikipedia has specifically requested users to not use web crawlers.

8. Teleport Pro

Most website downloaders/rippers/crawlers are good at what they do until the number of requests exceeds a certain limit. If you are looking to crawl and download a big site with hundreds or thousands of pages, you will need a more powerful and stable program like Teleport Pro.

Priced at $49.95, Teleport Pro is a high-speed website crawler and downloader with support for password-protected sites. You can search, filter, and download files based on file type and keywords, which can be a real time saver. Most web crawlers and downloaders do not support JavaScript, which is used in a lot of sites. Teleport will handle it easily.

Pros:

- JavaScript support

- Handle large sites

- Advanced scan rules

- FTP support

Cons:

- None

9. Offline Pages Pro

This is an iOS app for iPhone and iPad users who are soon traveling to a region where Internet connectivity is going to be a luxury. Keeping this thought in mind, you can download and use Offline Pages Pro for $9.99, rather on the expensive side, to browse webpages offline.

The idea is that you can surf your favorite sites even when you are on a flight. The app works as advertised but do not expect to download large websites. In my opinion, it is better suited for small websites or a few webpages that you really need offline.

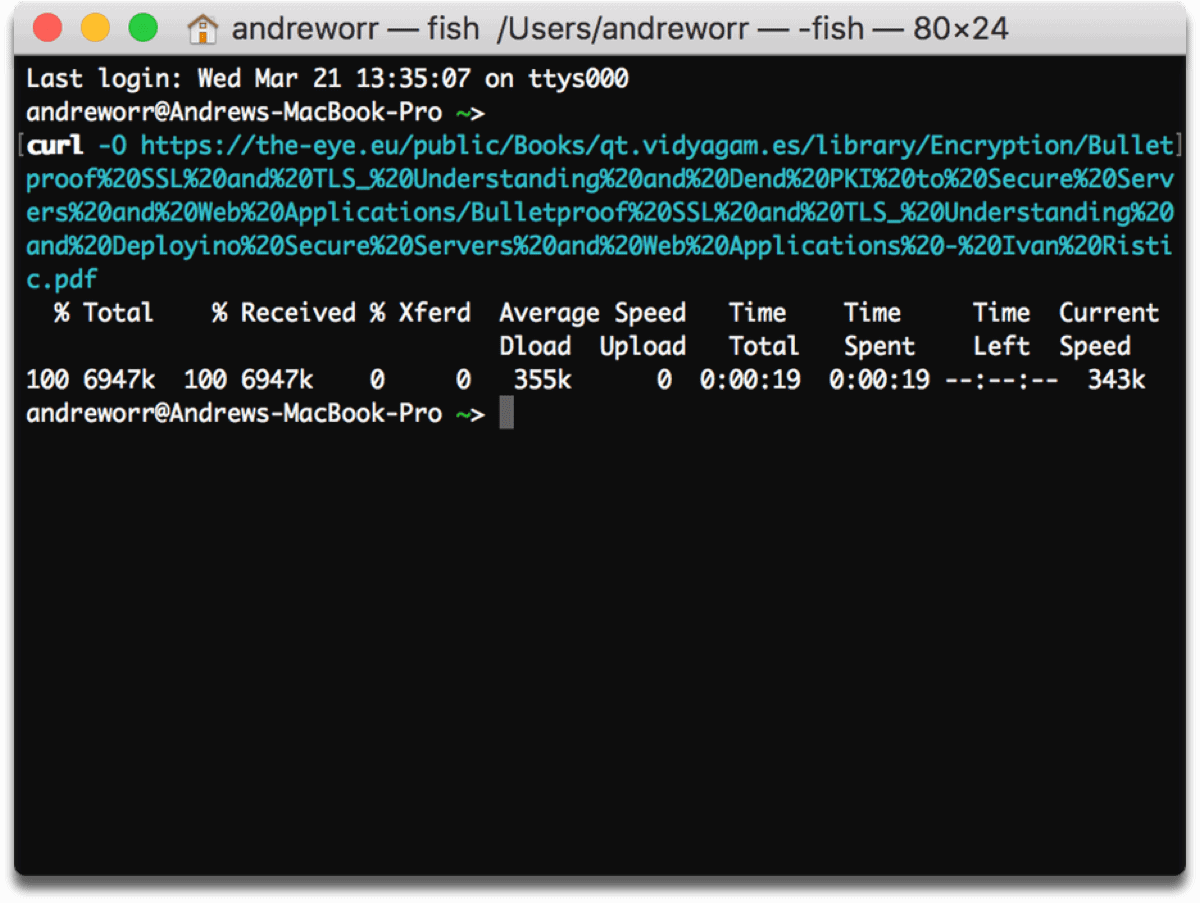

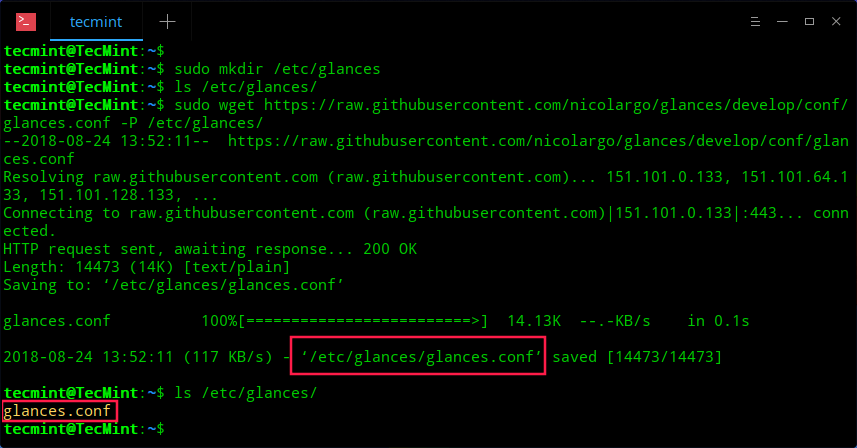

10. Wget

Wget (pronounced W get) is a command line utility for downloading websites. Remember the hacking scene from the movie The Social Network, where Mark Zuckerberg downloads the pictures for his website Facemash? Yes, he used the tool Wget. It is available for Mac, Windows, and Linux.

What makes Wget different from the other downloaders in this list is that it not only lets you download websites, but can also fetch individual files such as MP3s from a website, or even files that are behind a login page. That said, since it’s a command line tool, you will need some terminal expertise to use it. A simple Google search should do.

For example, the command – ‘wget www.example.com’ will download only the home page of the website. However, if you want an exact mirror of the website, including all the internal links and images, you can use the following command.

wget -m www.example.com

Pros:

- Available for Windows, Mac, and Linux

- Free and Open Source

- Download almost everything

Cons:

- Needs a bit of command line knowledge

Wrapping Up: Download Entire Website

These are some of the best tools and apps to download websites for offline use. You can open these sites in Chrome, just like regular online sites, but without an active Internet connection. I would recommend HTTrack if you are looking for a free tool and Teleport Pro if you can cough up some dollars. Also, the latter is more suitable for heavy users who are into research and work with data day in, day out. Wget is another good option if you feel comfortable with the command line.